Op-Ed: Designing Company Operations in an AI-Native World

As artificial intelligence (AI) advances and adoption increases, its impact is no longer limited to individual tools or isolated use cases. The most consequential changes are occurring at the level of company-wide operations: where AI is deployed, who is delivering it, and how it is being built. This is reflected in how organisations are structured, how teams execute, and how products are launched and sustained over time. AI is reshaping the fundamentals of organisation design itself. This is a major driver behind the 37.6% CAGR that Grand View Research estimates for the enterprise AI market through to 2030.

Within organisations that are actively working to operationalise AI in real production environments, success is determined not by model performance alone, but primarily by whether AI systems can be integrated into existing workflows, governed responsibly, and trusted to support mission-critical work. As the fastest-spreading technology in history, reaching over 1.2 billion users in less than 3 years, AI is rapidly spreading through organisations. Adapting to an AI-native world is critical, and it requires a strong understanding of the most fundamental shifts and clear prioritisation.

Where AI is Deployed: From Experimentation to Operational Infrastructure

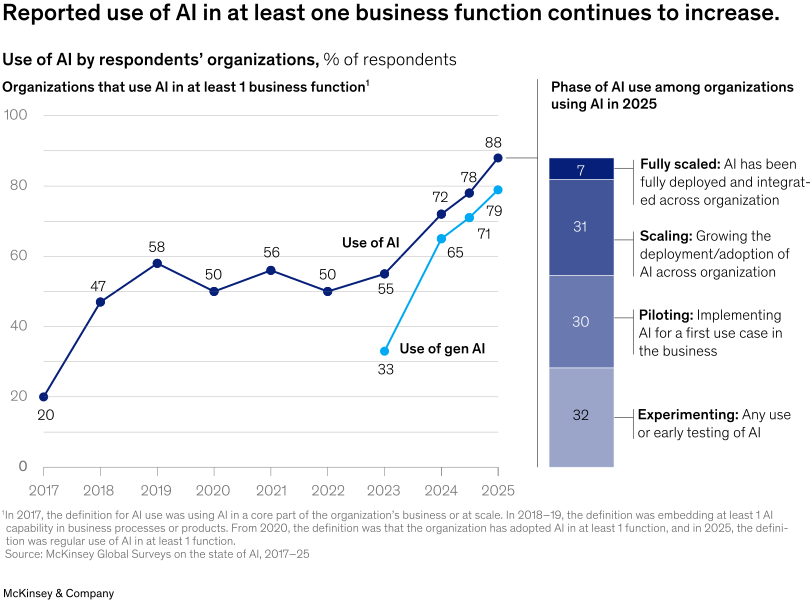

One of the most significant shifts in the industry today is the move from treating AI as an experimental capability to embedding it directly into core operating infrastructure. According to McKinsey & Company, 88% of companies now report regular use of AI in at least one business function, and 38% are already scaling or fully scaled. In earlier adoption phases, AI was often explored through standalone tools, disconnected from how work is actually performed. Today's adoption figures, by contrast, reflect a rapid transition towards AI becoming a foundational component of how organisations operate.

When AI is treated as part of an organisation’s operational backbone, it unlocks value across hiring decisions, workflow design, and organisational economics. Rather than relying primarily on headcount expansion, high-performing teams should increasingly examine how AI can add leverage to existing employees. A study by Google found that administrative workers can save 122 hours per year using AI. Scaled across an organisation, this provides significant leverage. At a macro level, the UK alone estimates $533B in economic growth driven by AI.

This shift does not necessitate or encourage wholesale role replacement. Instead, the use cases of AI with the clearest economic value today are to automate repeatable internal tasks, such as data processing, workflow orchestration, and system coordination. When designed around concrete business needs and integrated into systems that matter, these applications increase team capacity and resilience, enabling organisations to operate and scale more effectively.

Who is Delivering AI? The Importance of External Operators in Execution

Many organisations face a real constraint: AI is moving faster than most teams can. Larger enterprises take time to redesign operational systems to keep pace with rapidly evolving technological changes, while smaller startups that can move more quickly often experience resource limitations. As a result, companies must increasingly rely on agencies and professional services firms as long-term operating partners rather than short-term execution support. According to Precedence Research, the professional services market is forecasted to reach $3.04T by 2034, a 3x increase from its size in 2025. This growth is accelerated by the professional services industry moving quickly to adopt AI.

Technological adoption has the potential to move fastest when flexible and accessible technology, such as AI, is paired with experienced operators who can translate new capabilities into reliable, maintainable systems. In these cases, external partners can function as expert extensions of organisations. Making this model work requires organisations to invest in clean, well-documented data infrastructure as a shared foundation for effective partnership.

How AI is Being Built: Product Launches as Living Systems

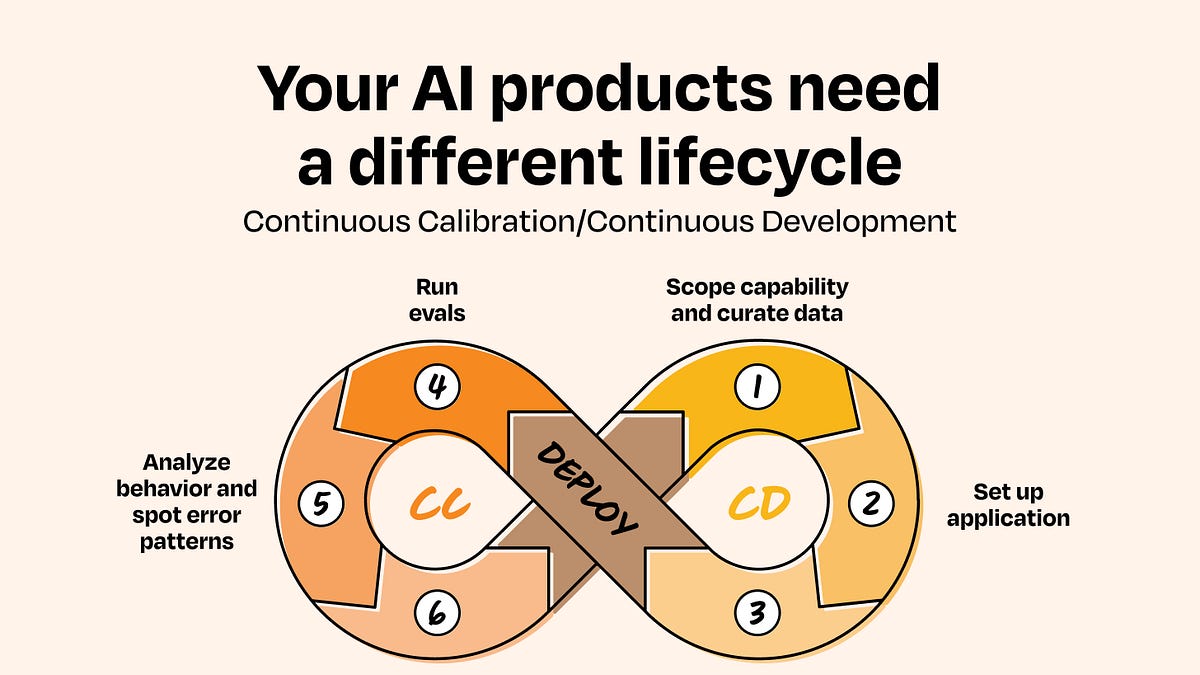

With the constant and unpredictable evolution of LLMs, companies must adapt a core engine of their growth: how products are launched and maintained. Traditional software launches follow a linear progression. Teams set roadmaps and timelines and can reliably estimate when launches will take place, within a margin of error. By contrast, AI products reach launch readiness on a difficult-to-define timeline, where time and effort cannot always be mapped to outcomes.

Source: Lenny’s Newsletter

Additionally, AI products do not launch and stop. They continue to learn and adapt after deployment, fundamentally altering how teams define readiness and success. In practice, this will require organisations to have tighter feedback loops between product development and customer use cases. A critical component is building upon how organisations already “dogfood” their products today: testing systems internally before external release. This kind of launch process builds internal confidence and surfaces edge cases early, which is critical given the long tail of certain AI use cases and outputs. According to Andreessen Horowitz, the development costs and failure rates for AI systems could be 3-5x higher, given long-tail behaviours. At a policy level, the US National Institute of Standards and Technology (NIST) has developed the Artificial Intelligence Risk Management Framework to help organisations manage these risks.

Getting Started with AI: The VIO Scoring Framework

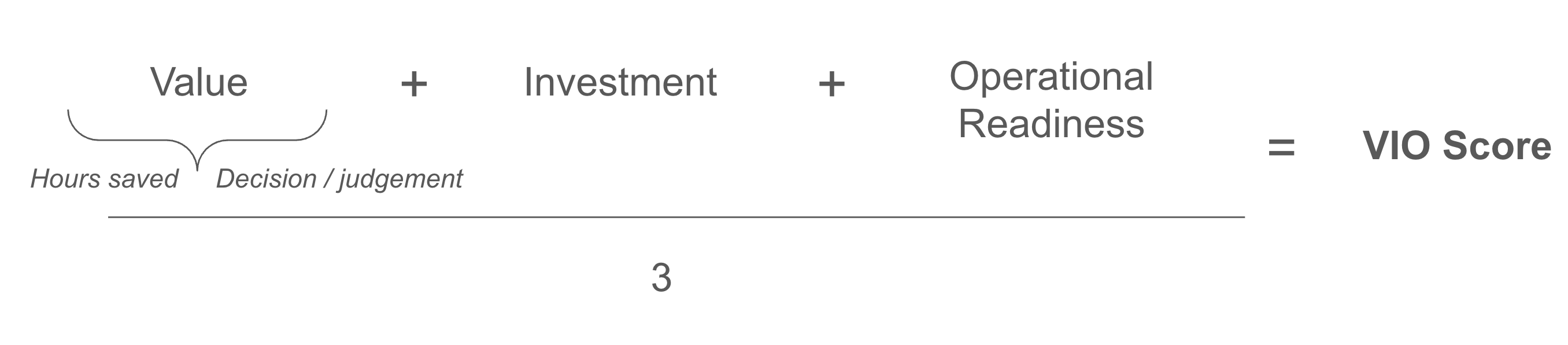

Transitioning to AI-native operations is a multi-stage operating shift, not a single decision to deploy a tool. While most organisations are overwhelmed by the opportunity that AI provides, many don’t know where to start. I developed the VIO scoring model to help organisations prioritise the processes and workflows in which to embed AI:

Value: how much economic value does this provide the organisation?

- Hours saved
  - How frequently is this task performed?
  - How long does one job take?
- Decision / judgement leverage
  - Are there outcomes that compound over time?
  - Is there a judgement component that cannot be easily replicated by a human?

Investment: how much time and resources will it take to realise impact?

- How complex is the integration?
- How much change management is required?
- How many resources are required?

Operational Readiness: how ready are data and systems for AI?

- Is the data digitised, accessible and reasonably clean?
- Are inputs and outputs observable and logged?
- Is there clear ownership of the data and systems?

To operationalise the VIO framework, assign a score of 1-10 for Value, Investment, and Operational Readiness, then calculate an overall VIO score by averaging the three. Depending on organisational priorities, these dimensions can be weighted differently. Processes can then be ranked by their VIO scores to guide sequencing decisions, providing a clear, structured approach towards AI-native operations.
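As a rough illustration, the averaging and ranking described above could be sketched in Python as follows. This is a minimal sketch under stated assumptions, not a definitive implementation of the framework: the names `Process`, `vio_score`, and `rank_processes` are hypothetical, the example workflows and their scores are invented for illustration, and the sketch assumes Investment is scored so that a higher number means less investment is required, so that a higher overall score always indicates a more attractive candidate.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Process:
    """A candidate workflow scored 1-10 on each VIO dimension (hypothetical type)."""
    name: str
    value: float
    investment: float  # assumption: higher score = less investment required
    readiness: float


def vio_score(process, weights=(1.0, 1.0, 1.0)):
    """Weighted average of Value, Investment, and Operational Readiness.

    Equal weights reduce this to the plain average of the three scores.
    """
    scores = (process.value, process.investment, process.readiness)
    for score in scores:
        if not 1 <= score <= 10:
            raise ValueError(f"{process.name}: scores must be between 1 and 10")
    total_weight = sum(weights)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(w * s for w, s in zip(weights, scores)) / total_weight


def rank_processes(processes, weights=(1.0, 1.0, 1.0)):
    """Return the processes sorted from highest to lowest VIO score."""
    return sorted(processes, key=lambda p: vio_score(p, weights), reverse=True)


if __name__ == "__main__":
    # Illustrative scores only; these are not drawn from real data.
    candidates = [
        Process("Invoice data entry", value=8, investment=7, readiness=9),
        Process("Contract review", value=9, investment=3, readiness=4),
        Process("Support ticket triage", value=6, investment=8, readiness=6),
    ]
    for p in rank_processes(candidates):
        print(f"{p.name}: {vio_score(p):.2f}")
```

Passing different `weights`, for example `(2, 1, 1)` to emphasise Value, is one way to reflect the organisational priorities mentioned above without changing how individual processes are scored.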

Implications for Egyptian Companies

These dynamics and outcomes are not reserved for large or US-based technology companies. Organisations in emerging markets are, in fact, positioned to capture outsized value. With model providers such as OpenAI and Anthropic making foundation models broadly accessible, AI is a democratising technology. Companies without decades of software sprawl, which are more common in emerging markets, can adopt AI-native operating models without the burden of legacy systems.

Despite the speed of AI adoption, organisations globally remain in the early stages of realising the highest value use cases or redesigning company operations around AI. Organisations that understand underlying shifts and put operational plans in motion can still build a durable lead. By establishing clear ownership, governance structures and integration patterns early, emerging market organisations can compete as global leaders.

Karim El Sewedy is an Egyptian operator who has introduced AI-related products and programmes to support companies during their operational transition. His role places him close to how companies are adapting their operating models as AI moves from testing into production environments.

El Sewedy is currently at Retool, a Series-C growth-stage startup backed by Sequoia Capital, whose low-code platform enables companies to build internal tools by connecting databases, APIs, and other systems to streamline operations. By leading the rollout of the company’s AI Agents offering and growth of its Assist product, he has shaped solutions adopted by large technology organisations, including teams at companies such as Amazon, Stripe, OpenAI, and Lyft.
